Serverless vs. Containers: Choosing What Actually Scales Your SaaS

A technical comparison of serverless functions and containerized services—covering performance, cost, and operational trade-offs for B2B SaaS at scale.

Scale without guesswork—choose the right compute model for your growth curve.

Table of Contents

- Why Compute Model Matters

- Architecture Comparison

- Performance & Cost Benchmarks

- Deployment Workflows

- Observability & Operations

- Case Study: SaaS Scaling Scenario

- Conclusion

Why Compute Model Matters

Your choice impacts:

- Cold Start Latency

- Concurrent Throughput

- Cost per Invocation vs. Reserved Capacity

- Operational Overhead

- Security & Compliance Boundaries

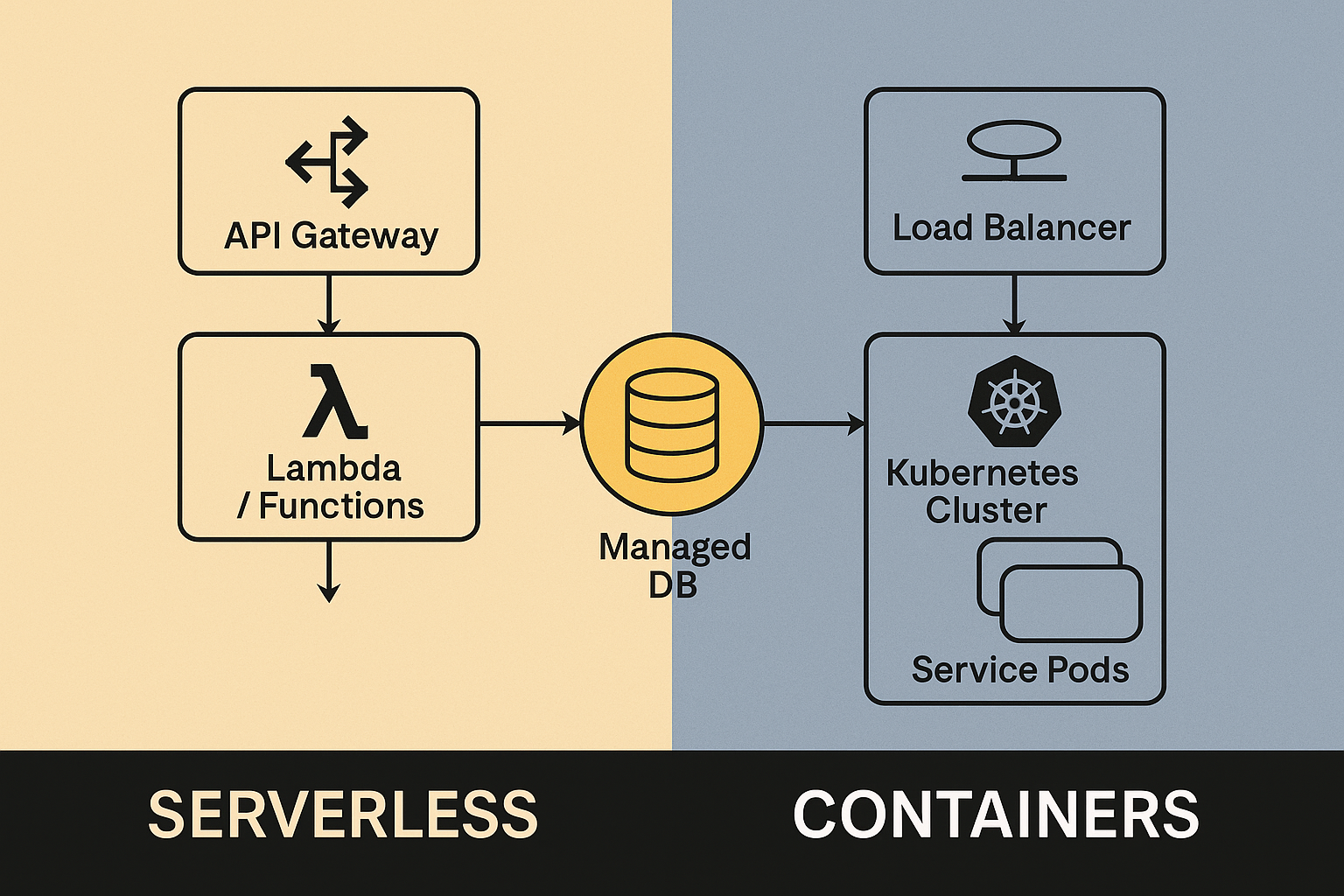

Architecture Comparison

```mermaid
flowchart LR
    subgraph Serverless
        A[API Gateway] --> B[Lambda / Functions]
    end
    subgraph Containers
        D[Load Balancer] --> E[Kubernetes Cluster]
        E --> F[Service Pods]
    end
    B --> C[Managed DB]
    F --> C
```

| Feature | Serverless Functions | Containerized Services |
|---|---|---|
| Provisioning | Automatic, event-driven | Manual, or autoscaled (e.g., Horizontal Pod Autoscaler) |
| Startup Latency | Cold start on new instances (100–300 ms) | Warm pods (5–50 ms); new pods start more slowly |
| Scaling Granularity | Per request | Per pod (10–100 concurrent requests/pod) |
| Billing Model | Per request plus GB-seconds of execution time | Provisioned vCPU/RAM, billed while running |
| Operational Complexity | Low (managed by provider) | Medium–High (cluster operations) |
| Vendor Lock-in Risk | High (provider-specific triggers, APIs, and config) | Low–Medium (standard container images) |
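The scaling-granularity row follows from Little's law: the average number of requests in flight (N) equals the arrival rate (λ) times the mean request duration (t̄). The worked example below assumes 200 requests/s, a 100 ms mean duration, and 10 concurrent requests per pod (the low end of the range in the table). These inputs are illustrative, not measured:

```latex
\begin{aligned}
N &= \lambda\,\bar{t} = 200\ \text{req/s} \times 0.1\ \text{s} = 20 \text{ requests in flight} \\
\text{Serverless environments} &\approx N = 20 \quad \text{(one request per environment at a time)} \\
\text{Pods} &= \left\lceil N / c_{\text{pod}} \right\rceil = \left\lceil 20 / 10 \right\rceil = 2
\end{aligned}
```

Lambda gives each execution environment one request at a time, so extra concurrency turns directly into new environments (and potential cold starts). A pod, by contrast, absorbs extra requests up to its per-pod capacity before another replica is needed.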

Performance & Cost Benchmarks

| Metric | Lambda @ 512 MB | ECS Fargate @ 512 MB | GKE Autopilot @ 512 MB |
|---|---|---|---|
| Monthly cost at 10 M requests (USD) | $1.50 | $120 | $110 |
| p95 latency | 120 ms | 45 ms | 50 ms |
| Ops overhead (FTE) | 0.1 | 1.0 | 1.2 |

Cost Formula
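A common way to model the two billing styles is as follows:

- Serverless cost scales with monthly requests R, mean duration t̄ (seconds), and memory M (GB).
- Container cost scales with replica count n, billed hours H, and per-replica vCPU v and memory m (GB).

The worked numbers are a sketch, not a benchmark. They assume us-east-1 x86 list prices: $0.20 per million Lambda requests, $0.0000166667 per GB-second, and Fargate at $0.04048 per vCPU-hour and $0.004445 per GB-hour. They also assume a 100 ms mean duration and three 0.25 vCPU / 0.5 GB tasks running 730 h/month. Free tiers and API Gateway, load balancer, and data-transfer charges are ignored. Verify prices against current provider pricing before relying on them.

```latex
\begin{aligned}
C_{\text{sls}} &= R\,\bigl(p_{\text{req}} + \bar{t}\,M\,p_{\text{GB-s}}\bigr) \\
C_{\text{ctr}} &= n\,H\,\bigl(v\,p_{\text{vCPU-h}} + m\,p_{\text{GB-h}}\bigr) \\
R^{*} &= \frac{C_{\text{ctr}}}{p_{\text{req}} + \bar{t}\,M\,p_{\text{GB-s}}} \quad \text{(break-even monthly requests)} \\[6pt]
C_{\text{sls}} &= 10^{7}\,\bigl(2\times10^{-7} + 0.1 \times 0.5 \times 1.66667\times10^{-5}\bigr) \approx 10^{7} \times 1.0333\times10^{-6} \approx \$10.33 \\
C_{\text{ctr}} &= 3 \times 730 \times \bigl(0.25 \times 0.04048 + 0.5 \times 0.004445\bigr) = 2190 \times 0.0123425 \approx \$27.03 \\
R^{*} &\approx 27.03 / (1.0333\times10^{-6}) \approx 2.6\times10^{7} \text{ requests/month}
\end{aligned}
```

Under these assumptions, pay-per-use is cheaper below roughly 26 M requests/month, and the always-on fleet wins above it. Longer durations or more memory per function raise the per-request cost and pull the break-even point lower. Treat this as a template for your own inputs.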

Deployment Workflows

Serverless CI/CD

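```yaml
# template.yaml (AWS SAM)
AWSTemplateFormatVersion: "2010-09-09"
Transform: AWS::Serverless-2016-10-31   # required for AWS::Serverless::* resource types

Resources:
  ApiFunction:
    Type: AWS::Serverless::Function
    Properties:
      CodeUri: src/
      Handler: handler.main   # src/handler.js must export `main`
      Runtime: nodejs18.x
      MemorySize: 512         # MB
      Timeout: 10             # seconds
      Events:
        ApiGateway:
          Type: Api
          Properties:
            Path: /api/{proxy+}
            Method: ANY
```

- Pipeline: CodeCommit → CodeBuild → SAM Deploy

- Testing: local invocation via `sam local invoke`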
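If cold starts on the latency-sensitive path are the main concern, SAM can keep a set number of execution environments initialized. The sketch below shows the extra properties, assuming the `ApiFunction` resource from the template above. The value 5 is an illustrative placeholder. Provisioned concurrency is billed for as long as it is configured, which moves this function toward the reserved-capacity pricing of containers:

```yaml
# Sketch: additions to ApiFunction in template.yaml (5 is a placeholder value)
ApiFunction:
  Type: AWS::Serverless::Function
  Properties:
    # ...CodeUri, Handler, Runtime, etc. as in the template above...
    AutoPublishAlias: live                # provisioned concurrency attaches to a published alias
    ProvisionedConcurrencyConfig:
      ProvisionedConcurrentExecutions: 5  # environments kept initialized; size from observed peak
```

Traffic beyond the provisioned count still lands on on-demand environments and can still cold-start.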

Container CI/CD

```yaml
# deployment.yaml (Kubernetes)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api-service
spec:
  replicas: 3
  selector:
    matchLabels:
      app: api
  template:
    metadata:
      labels:
        app: api
    spec:
      containers:
        - name: api
          # Placeholder tag: pin an immutable version or commit SHA instead of :latest
          image: registry/company/api:1.0.0
          resources:
            requests:
              memory: "512Mi"
              cpu: "500m"
            limits:
              memory: "512Mi"
              cpu: "500m"
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: api-lb
spec:
  type: LoadBalancer   # the default (ClusterIP) is reachable only inside the cluster
  selector:
    app: api
  ports:
    - port: 80
      targetPort: 8080
```

- Pipeline: GitHub Actions → Docker build → push → `kubectl apply`

- Testing: Helm chart dry-run plus integration tests in a staging cluster
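Pod-level autoscaling, like the scale-out to 20 pods in the case study below, is typically configured with a HorizontalPodAutoscaler. The sketch below targets the `api-service` Deployment above. The 70% CPU target and the 3–20 replica bounds are illustrative assumptions. CPU-based scaling requires the metrics server (or an equivalent metrics API) in the cluster:

```yaml
# hpa.yaml: sketch; target and bounds are illustrative, not tuned values
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: api-service
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: api-service
  minReplicas: 3          # baseline matching the Deployment's replicas
  maxReplicas: 20         # upper bound for launch-style bursts
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of each pod's CPU request (500m)
```

Once an HPA owns the replica count, consider dropping `replicas` from the Deployment manifest. Otherwise each `kubectl apply` resets the count to 3 until the HPA scales it again.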

Observability & Operations

| Concern | Serverless | Containers |
|---|---|---|
| Logging | Centralized (CloudWatch) | Cluster-wide (EFK: Elasticsearch, Fluentd, Kibana) |
| Tracing | X-Ray | OpenTelemetry + Jaeger |
| Metrics | Provider dashboards + Prometheus | Prometheus + Grafana |
| Security Patching | Runtime patched by provider; dependencies still team-managed | Team-managed image updates |
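On the container side, a common pattern is to run an OpenTelemetry Collector that receives OTLP traces from services and forwards them to Jaeger, which accepts OTLP directly. Below is a minimal collector config sketch. The `jaeger-collector:4317` endpoint is a hypothetical in-cluster service name. `insecure: true` is only appropriate for plaintext traffic inside a trusted network:

```yaml
# otel-collector.yaml: sketch; endpoint names are hypothetical
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317   # services export OTLP/gRPC here
processors:
  batch: {}                      # batch spans before export
exporters:
  otlp/jaeger:
    endpoint: jaeger-collector:4317
    tls:
      insecure: true
service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp/jaeger]
```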

Case Study: SaaS Scaling Scenario

A B2B SaaS platform saw a 5× traffic spike during a product launch:

- Serverless: cold starts pushed p95 latency to 650 ms during the burst; user complaints rose 15%.

- Containers: autoscaled to 20 pods; p95 latency held steady at ≈ 60 ms; ops load ≈ 0.5 FTE.

Moving the core API to containers reduced support tickets by 40% and saved $20k/month at scale.
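Here is a short worked argument for why a burst can drag serverless p95 up to cold-start latency. p95 is the latency that 95% of requests beat. So if more than 5% of requests in a window pay a cold start, p95 for that window is at least the cold-start latency, L_cold. The numbers below are illustrative assumptions, not data from the case study: 100 ms mean duration, 200 req/s rising to 1,000 req/s, and no idle warm environments left over from earlier traffic.

```latex
\begin{aligned}
N_{\text{before}} &= \lambda\,\bar{t} = 200 \times 0.1 = 20, \qquad
N_{\text{burst}} = 1000 \times 0.1 = 100 \\
\text{new environments} &\approx N_{\text{burst}} - N_{\text{before}} = 80 \\
f_{\text{cold}} &\approx \frac{80}{1000} = 8\% > 5\% \;\Rightarrow\; p_{95} \ge L_{\text{cold}} \text{ during the first second of the burst}
\end{aligned}
```

A container fleet can mask this as long as existing pods have spare per-pod concurrency while new pods start. That is consistent with the steadier p95 reported for containers above.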

Conclusion

Choose serverless for unpredictable, low-throughput workloads and minimal ops. Opt for containers when consistent performance, predictable cost, and full control matter. Align your compute model with your SaaS growth stage to avoid costly re-architecture.
