Prototyping AI Features: When to Fake It, When to Build It for Real

A technical guide on rapid prototyping AI-driven features—balancing realism, speed, and feasibility to validate ideas before investing in full-scale models.

An AI prototype should answer “Should we build this?”, not “Can we build this?”

Table of Contents

- The Cost of Premature AI Builds

- Fake It: Wizard of Oz Method

- Hybrid Prototyping Approach

- When to Go Full AI

- Prototype Validation Metrics

- Case Study: Smart Assistant

- Conclusion

The Cost of Premature AI Builds

Building a full AI feature before validation risks:

- High compute & training costs

- Lengthy dev cycles (months, not weeks)

- Early model drift and maintenance overhead

Validate market fit first with cheaper, faster methods.

Fake It: Wizard of Oz Method

Humans simulate the AI behind the scenes to test user reactions:

- Use Case: Complex NLP tasks (summarization, customer support)

- Approach: Human operators mimic AI output; the user is unaware that a person is responding.

flowchart LR

User[User Interface] --> Operator[Human Operator]

Operator -->|Real-time responses| User

Pros:

- Immediate results; no tech debt.

- User-driven refinements; precise feedback loop.

Cons:

- Labor-intensive; not scalable long-term.
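The relay in the diagram above can be sketched in a few lines. This is a minimal illustration, not a prescribed implementation: the class name `WizardOfOzSession` and its methods are hypothetical, and a real prototype would put a chat UI and an operator console in front of it. The point it shows is that every exchange gets logged, so the prototype produces evaluation data as a side effect.

```python
import queue
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class WizardOfOzSession:
    """Relays user messages to a hidden human operator and logs each exchange."""

    pending: queue.Queue = field(default_factory=queue.Queue)
    transcript: list = field(default_factory=list)

    def user_sends(self, message: str) -> None:
        # Called by the UI; the user believes an AI will answer.
        self.pending.put(message)

    def operator_next(self) -> Optional[str]:
        # Called by the operator console to fetch the next unanswered message.
        try:
            return self.pending.get_nowait()
        except queue.Empty:
            return None

    def operator_replies(self, message: str, reply: str) -> str:
        # Log the pair so it can later serve as evaluation or training data.
        self.transcript.append({"user": message, "reply": reply})
        return reply


if __name__ == "__main__":
    session = WizardOfOzSession()
    session.user_sends("Summarize my last three tickets.")
    msg = session.operator_next()
    print(session.operator_replies(msg, "Two billing issues and one login issue."))
```

Keeping the transcript from day one means the later hybrid and automated stages can be compared against what human operators actually wrote.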

Hybrid Prototyping Approach

Blend low-fidelity AI with manual intervention:

- Use Case: Data extraction, search relevance testing.

- Approach: Basic LLM or heuristic; fallback to human for edge cases.

flowchart LR

User --> SimpleAI[Simple Model / Heuristic]

SimpleAI -->|Fallback| Operator

Operator --> User

SimpleAI -->|Standard responses| User

Example stack: GPT-4o API for initial responses, with human fallback via a Slack integration.

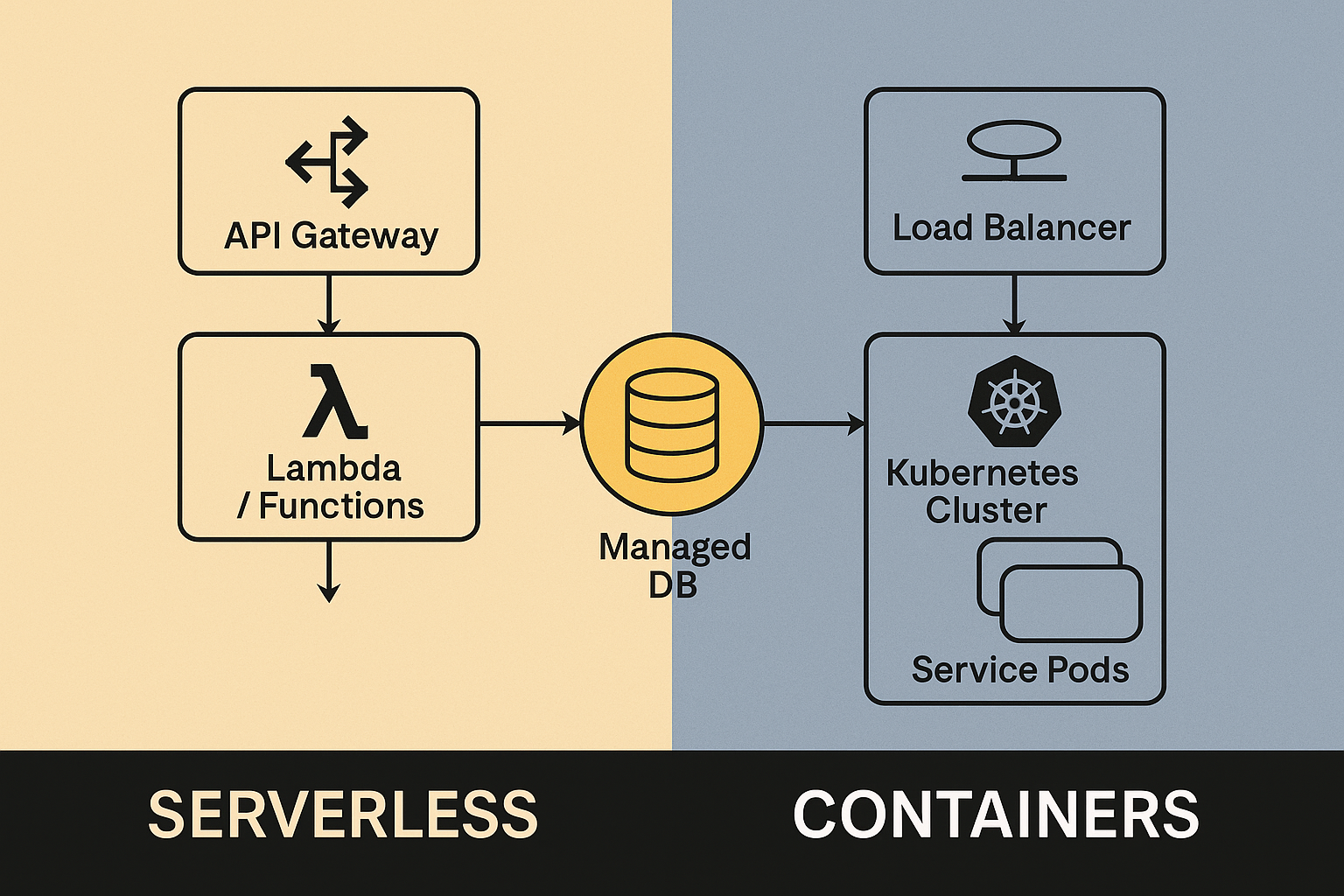
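One way to express the hybrid flow in code is a router that returns the automated draft when it is confident and escalates otherwise. The sketch below is illustrative only: `HybridRouter`, `keyword_heuristic`, `fake_operator`, and the 0.8 threshold are all assumptions introduced here. In a real prototype, `draft_fn` might wrap an LLM API call and `escalate_fn` might post to a chat channel and wait for a reply.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class HybridRouter:
    # draft_fn returns (answer, confidence in [0, 1]); both hooks are hypothetical.
    draft_fn: Callable[[str], Tuple[str, float]]
    escalate_fn: Callable[[str, str], str]
    threshold: float = 0.8  # assumed cutoff; tune it against logged outcomes

    def respond(self, query: str) -> Tuple[str, str]:
        draft, confidence = self.draft_fn(query)
        if confidence >= self.threshold:
            return draft, "automated"
        # Edge case: hand the query and the draft to a human operator.
        return self.escalate_fn(query, draft), "human"


def keyword_heuristic(query: str) -> Tuple[str, float]:
    # Stand-in for a simple model: canned answers for known intents.
    canned = {"reset password": "Use the 'Forgot password' link on the login page."}
    for phrase, answer in canned.items():
        if phrase in query.lower():
            return answer, 0.95
    return "", 0.0


def fake_operator(query: str, draft: str) -> str:
    # Stand-in for a human; a real prototype would wait for an operator's reply.
    return f"[operator] Looking into: {query}"


if __name__ == "__main__":
    router = HybridRouter(keyword_heuristic, fake_operator)
    print(router.respond("How do I reset password?"))
    print(router.respond("Refund for order 12"))
```

Returning the route label alongside the answer makes it straightforward to track how often a human had to step in.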

When to Go Full AI

Criteria to fully automate your AI feature:

- User Validation: Prototype has strong adoption signals (≥ 70% positive feedback).

- Data Availability: Sufficient high-quality data to train/refine models.

- Operational ROI: Clear savings (time/cost) from automation at scale.

| Criteria | Fake (Wizard of Oz) | Hybrid Approach | Full AI Automation |
|---|---|---|---|
| Validation speed | Days | Weeks | Months |
| Scalability | Low | Medium | High |
| Data requirement | Minimal | Moderate | Significant |
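The three criteria can be turned into an explicit go/no-go check. The sketch below is one possible encoding, with assumptions stated plainly: the 70% bar comes from the text, while the `min_examples` default of 1000 and the simple cost comparison used for ROI are placeholders invented for illustration and should be replaced with figures that fit the feature.

```python
from dataclasses import dataclass


@dataclass
class PrototypeEvidence:
    positive_feedback_rate: float   # share of positive responses, 0..1
    labeled_examples: int           # usable examples collected so far
    monthly_manual_cost: float      # cost of the human-in-the-loop setup today
    monthly_automation_cost: float  # projected cost of running the model


def ready_for_full_ai(e: PrototypeEvidence, min_examples: int = 1000) -> dict:
    """Evaluate each criterion separately so a failed check is easy to spot."""
    checks = {
        "user_validation": e.positive_feedback_rate >= 0.70,
        # min_examples is an assumed placeholder, not a figure from the article.
        "data_availability": e.labeled_examples >= min_examples,
        "operational_roi": e.monthly_automation_cost < e.monthly_manual_cost,
    }
    checks["go"] = all(checks.values())
    return checks


if __name__ == "__main__":
    evidence = PrototypeEvidence(0.82, 5000, 10_000.0, 4_000.0)
    print(ready_for_full_ai(evidence))
```

Reporting each check individually, rather than a single boolean, shows which criterion is blocking the move to full automation.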

Prototype Validation Metrics

| Metric | Definition | Tooling |
|---|---|---|
| Task Success Rate | % completion of AI-assisted tasks | Amplitude, Mixpanel |
| User Satisfaction (CSAT) | User-rated satisfaction (1–5 scale) | Typeform, Delighted |
| Time Savings | Avg. minutes saved per task | Analytics logging |
| False Positive Rate | % of incorrect or harmful suggestions | Manual review, feedback loop |
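As a rough illustration of how these four metrics could be computed from prototype logs, the sketch below assumes one record per task with the hypothetical fields `completed`, `csat`, `baseline_min`, `actual_min`, and `flagged_incorrect`. The schema is invented for this example; analytics tools such as those in the table would export their own formats.

```python
from statistics import mean


def summarize_sessions(sessions: list) -> dict:
    """Compute the four validation metrics from per-task log records."""
    n = len(sessions)
    if n == 0:
        raise ValueError("no sessions logged")
    # CSAT is optional: not every user submits a rating.
    rated = [s["csat"] for s in sessions if s.get("csat") is not None]
    return {
        "task_success_rate": sum(s["completed"] for s in sessions) / n,
        "csat_mean": mean(rated) if rated else None,
        "avg_minutes_saved": mean(s["baseline_min"] - s["actual_min"] for s in sessions),
        "false_positive_rate": sum(s["flagged_incorrect"] for s in sessions) / n,
    }


if __name__ == "__main__":
    logs = [
        {"completed": True, "csat": 5, "baseline_min": 10, "actual_min": 6,
         "flagged_incorrect": False},
        {"completed": False, "csat": None, "baseline_min": 8, "actual_min": 8,
         "flagged_incorrect": True},
    ]
    print(summarize_sessions(logs))
```

Time savings need a baseline, so it helps to record how long the task took without the feature before the prototype goes live.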

Case Study: Smart Assistant

Prototype scenario: Customer support assistant providing automated replies.

- Wizard of Oz:
  - Human agent mimicked GPT-4o output.
  - Validation: 80% satisfaction, task completion <2 mins.

- Hybrid Stage:
  - Automated draft with GPT-4o; human validation for edge cases.
  - Validation: Task time ↓40%, 90% accuracy.

- Production Launch:
  - Fully automated responses with guardrails.
  - Outcomes: CSAT 4.5, human intervention ↓70%.

Conclusion

Prototyping AI features incrementally—starting with human-driven “fake AI,” progressing to hybrid, then automating—balances speed, cost, and validation. Only invest heavily in AI models after user feedback confirms value.
